September 30, 2024

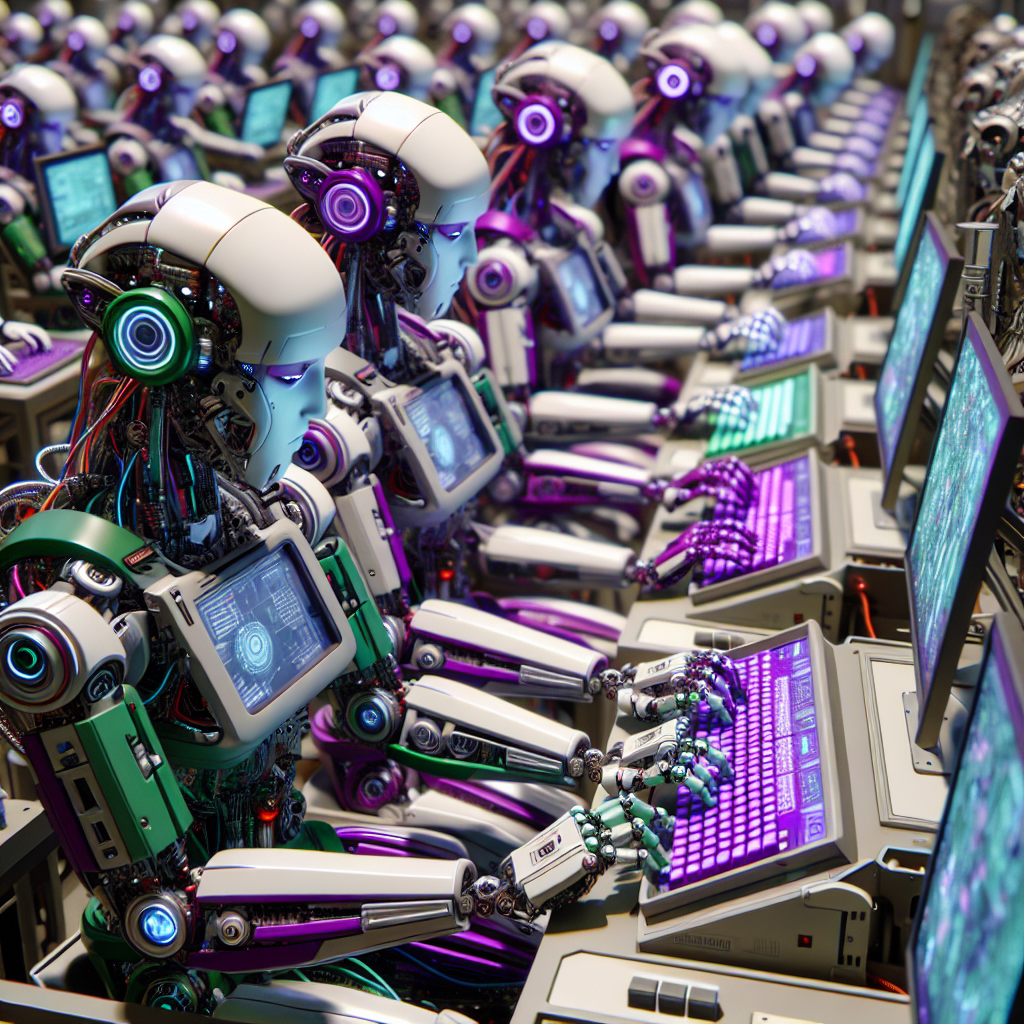

The Power of Generative AI for Content Moderation


The rapid growth of the internet and digital communication has brought many benefits, but it has also introduced significant challenges, particularly in keeping online environments safe. Multi-Agent AI, which uses multiple generative agents, offers a promising solution by analyzing and flagging inappropriate or harmful content in real time.

Limitations of Traditional Content Moderation

Traditional content moderation methods often rely heavily on human moderators and simple algorithms. These approaches can be slow, inconsistent, and prone to error. Human moderators can experience burnout from constant exposure to harmful content, while basic algorithms may miss nuanced or emerging threats. Together, these limitations make it difficult to maintain a safe and welcoming online environment at scale.

Human moderators, despite their best efforts, are often overwhelmed by the sheer volume of content that needs to be reviewed. This can delay the identification and removal of harmful content, allowing it to spread and potentially cause harm before action is taken. Furthermore, the emotional toll on moderators who are constantly exposed to graphic, violent, or otherwise disturbing material cannot be overstated. It can lead to high turnover and a constant need to train new moderators, which is both time-consuming and costly.

Simple algorithms, on the other hand, lack the sophistication needed to understand context. For instance, a keyword-based filter might flag a post as harmful simply because it contains a particular word, even when that word appears in a non-offensive or educational context. Conversely, subtler forms of harmful content, such as coded language or emerging threats that have not yet been identified, can slip through the cracks. This inconsistency can undermine the credibility of the moderation process and the overall safety of the platform.
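To make the keyword problem concrete, here is a minimal sketch of the kind of naive filter described above. The blocklist and the example posts are hypothetical, chosen only to show one false positive (an educational sentence) and one false negative (a coded spelling).

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"attack", "kill"}

def keyword_flag(text: str) -> bool:
    """Return True if any blocklisted word appears as a whole token (case-insensitive)."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return any(token in BLOCKLIST for token in tokens)

examples = [
    "History lesson: how the attack on Pearl Harbor unfolded",  # educational, yet flagged
    "I'm going to k1ll you",                                    # threatening, yet missed (coded spelling)
    "Have a nice day",                                          # benign, not flagged
]

for text in examples:
    print(keyword_flag(text), "|", text)
```

The filter has no notion of context, so it cannot tell a history lesson from a threat, and a single swapped character is enough to evade it.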

What is Multi-Agent AI?

Multi-Agent AI involves multiple autonomous agents working together to achieve complex tasks. Compared with single-agent systems, this approach can be more robust and scalable, which makes it well suited to dynamic environments such as social media platforms. Each agent operates semi-independently but collaborates with the others to improve efficiency and accuracy.

By breaking down complex problems into smaller tasks, Multi-Agent AI systems can handle various challenges more effectively. For example, one agent may specialize in sentiment analysis, another in image recognition, and yet another in spam detection. By sharing their findings, these agents perform a comprehensive analysis, improving the system’s resilience and adaptability to new content and threats.
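The division of labor described above can be sketched as a set of specialist functions whose findings are merged into one shared report. This is a minimal illustration under stated assumptions: the three agents are hypothetical toy heuristics standing in for real sentiment, image, and spam models, `image_labels` is assumed to come from an upstream vision model, and the "flag if any agent crosses the threshold" rule is just one possible way to combine findings.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Post:
    text: str
    image_labels: List[str] = field(default_factory=list)  # assumed output of an upstream vision model

# Hypothetical toy specialists; each returns a risk score in [0, 1].
def sentiment_agent(post: Post) -> float:
    hostile = {"hate", "stupid", "idiot"}
    words = [w.strip(".,!?") for w in post.text.lower().split()]
    return min(1.0, sum(w in hostile for w in words) / 2)

def image_agent(post: Post) -> float:
    unsafe = {"weapon", "gore"}
    return 1.0 if unsafe & set(post.image_labels) else 0.0

def spam_agent(post: Post) -> float:
    return 1.0 if post.text.lower().count("http") >= 3 else 0.0

AGENTS: Dict[str, Callable[[Post], float]] = {
    "sentiment": sentiment_agent,
    "image": image_agent,
    "spam": spam_agent,
}

def analyze(post: Post, threshold: float = 0.5) -> Dict[str, object]:
    """Collect every agent's finding into one shared report and derive a combined verdict."""
    findings = {name: agent(post) for name, agent in AGENTS.items()}
    flagged_by = [name for name, score in findings.items() if score >= threshold]
    return {"findings": findings, "flagged_by": flagged_by, "flag": bool(flagged_by)}

print(analyze(Post("You idiot, I hate this", image_labels=["weapon"])))
```

Recording which agents raised the flag, not just the final verdict, is what lets a reviewer see why a post was flagged; a weighted sum of scores would be an equally reasonable combination rule.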

The collaborative nature of Multi-Agent AI allows for a more nuanced and thorough approach to content moderation. Each agent can leverage its specialized expertise to identify specific types of harmful content, and then share this information with the other agents. This creates a network of knowledge that can be used to improve the overall accuracy and effectiveness of the system. Additionally, the semi-independent operation of each agent allows the system to continue functioning even if one agent encounters an issue, ensuring continuous and reliable moderation.
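The claim that the system keeps working when one agent fails can be illustrated with per-agent error isolation. In this minimal sketch, each agent runs inside its own `try` block, a failing agent contributes `None`, and the combined score is taken from the agents that did respond. Both agents and the "highest available score" rule are hypothetical choices for illustration.

```python
import logging
from typing import Callable, Dict, Optional

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("moderation")

Agent = Callable[[str], float]

def run_agents(text: str, agents: Dict[str, Agent]) -> Dict[str, Optional[float]]:
    """Run each agent independently; a failing agent yields None instead of halting the pipeline."""
    results: Dict[str, Optional[float]] = {}
    for name, agent in agents.items():
        try:
            results[name] = agent(text)
        except Exception as exc:  # isolate the failure to this one agent
            log.warning("agent %s failed (%s); continuing without it", name, exc)
            results[name] = None
    return results

def combined_score(results: Dict[str, Optional[float]]) -> Optional[float]:
    """Highest score among agents that answered; None only if every agent failed."""
    available = [score for score in results.values() if score is not None]
    return max(available) if available else None

# Hypothetical agents: one works, one simulates an outage.
def toxicity_agent(text: str) -> float:
    return 0.9 if "hate" in text.lower() else 0.1

def unavailable_agent(text: str) -> float:
    raise RuntimeError("model server unreachable")

results = run_agents("I hate you", {"toxicity": toxicity_agent, "vision": unavailable_agent})
print(results)                  # {'toxicity': 0.9, 'vision': None}
print(combined_score(results))  # 0.9
```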

The Role of Generative AI in Content Moderation

Generative AI agents are a type of AI that can create new data based on patterns they have learned. In content moderation, these agents can generate synthetic examples of harmful content to improve the training of detection models. They can also simulate user behavior to predict and identify risks. Together, these capabilities help the system recognize and respond to many forms of harmful content, from hate speech to misinformation.

For instance, generative AI agents can use techniques such as Generative Adversarial Networks (GANs) to create realistic examples of offensive content. Exposing detection models to a wide range of harmful material in this way can improve their ability to recognize subtle and emerging threats. This proactive approach enables the system not only to identify known harmful content but also to adapt to new forms as they arise.

Generative AI can also be used to create a diverse range of training data that includes various forms of harmful content that may not be present in existing datasets. This can help address the issue of bias in AI algorithms by ensuring that the system is trained on a more representative sample of content. Additionally, generative AI can simulate the behavior of malicious users, allowing the system to anticipate and counteract new tactics that may be used to spread harmful content.
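The idea of simulating malicious users to enrich training data can be approximated, very roughly, with data augmentation. The sketch below deliberately swaps the generative model for simple rule-based perturbations (look-alike digits, inserted spaces, upper-casing) that mimic common evasion tactics; it is a stand-in for illustration, not a generative approach. Every perturbed copy keeps the original's "harmful" label, and the function names and example phrases are hypothetical.

```python
import random
from typing import Callable, List

# Map common letters to look-alike digits, as users do to evade filters.
LEET = str.maketrans({"a": "4", "e": "3", "i": "1", "o": "0", "s": "5"})

def leetspeak(text: str) -> str:
    return text.translate(LEET)

def spaced(text: str) -> str:
    # "kill you" -> "k i l l  y o u": spaces inside words break whole-word matching
    return "  ".join(" ".join(word) for word in text.split())

def shouting(text: str) -> str:
    # Case changes defeat filters that match case-sensitively.
    return text.upper()

PERTURBATIONS: List[Callable[[str], str]] = [leetspeak, spaced, shouting]

def augment(harmful_examples: List[str], variants_per_example: int = 2, seed: int = 0) -> List[str]:
    """Return the originals followed by perturbed copies; every copy keeps the 'harmful' label."""
    rng = random.Random(seed)  # seeded so the augmented set is reproducible
    augmented = list(harmful_examples)
    for text in harmful_examples:
        for perturb in rng.sample(PERTURBATIONS, k=variants_per_example):
            augmented.append(perturb(text))
    return augmented

print(augment(["kill you", "I will hurt you"]))
```

A generative model would produce far more varied rewrites than three fixed rules, but the training principle is the same: the classifier sees evasive variants labeled as harmful before attackers use them.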

Real-Time Analysis and Flagging

Real-time analysis is essential for effective content moderation. Multi-Agent AI systems use techniques such as natural language processing (NLP) and computer vision to examine content as it is uploaded. These systems can flag questionable material in milliseconds, enabling human moderators to review and take action quickly. This real-time capability is crucial for platforms with high user activity, where harmful content can spread rapidly.

As user-generated content is continuously monitored, NLP lets the system interpret text and identify harmful language, while computer vision scans images and videos for inappropriate material. Flagged content is then prioritized for human review, ensuring swift responses. This capability is especially important for live-streaming and social media platforms, where harmful content can have an immediate and widespread impact.
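Prioritizing flagged content for human review maps naturally onto a priority queue. In this minimal sketch, built on Python's standard `heapq` module, the most severe item is popped first and equal severities are reviewed in arrival order. The severity scale and the extra boost for live content (`LIVE_BOOST`) are hypothetical parameters, not values from any particular platform.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass(order=True)
class ReviewItem:
    sort_key: float                          # negated priority, because heapq is a min-heap
    seq: int                                 # tie-breaker: equal priority is reviewed first-in, first-out
    content_id: str = field(compare=False)
    reason: str = field(compare=False)

class ReviewQueue:
    """Flagged content waiting for human moderators, highest priority first."""

    LIVE_BOOST = 0.5  # hypothetical extra priority for live content

    def __init__(self) -> None:
        self._heap: List[ReviewItem] = []
        self._counter = itertools.count()

    def push(self, content_id: str, severity: float, reason: str, is_live: bool = False) -> None:
        priority = severity + (self.LIVE_BOOST if is_live else 0.0)
        heapq.heappush(self._heap, ReviewItem(-priority, next(self._counter), content_id, reason))

    def pop(self) -> Tuple[str, str]:
        item = heapq.heappop(self._heap)
        return item.content_id, item.reason

    def __len__(self) -> int:
        return len(self._heap)

queue = ReviewQueue()
queue.push("post-1", 0.6, "spam")
queue.push("stream-7", 0.6, "violence", is_live=True)
queue.push("post-2", 0.9, "hate speech")
while queue:
    print(queue.pop())  # stream-7, then post-2, then post-1
```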

The ability to analyze and flag content in real time is particularly important for preventing the spread of harmful content during live events. During a live stream, for example, harmful content can reach thousands or even millions of viewers within seconds. Real-time analysis allows the system to detect and flag such content immediately, limiting how far it spreads. This is especially critical for platforms that cater to younger audiences, who may be more vulnerable to the effects of harmful content.

Creating Safer Online Spaces

The main goal of using Multi-Agent AI in content moderation is to create safer online spaces. By efficiently detecting and mitigating harmful content, these systems protect users from exposure to offensive material. This proactive approach not only enhances the user experience but also helps ensure compliance with the platform's community guidelines, fostering trust in digital platforms.

Creating safer online spaces is not just about removing harmful content, but also about fostering a positive and inclusive environment for all users. Multi-Agent AI can help achieve this by identifying and promoting positive content, as well as flagging and removing harmful material. For example, the system can prioritize content that promotes healthy discussions and respectful interactions, while filtering out content that is likely to cause harm or offense. This can help create a more welcoming and supportive community, where users feel safe and valued.

Mitigating Biases and Fairness Issues

Despite its advantages, Multi-Agent AI faces challenges. Ethical concerns, such as bias in AI algorithms and the risk of over-censorship, remain significant issues. Future development will need to focus on improving the transparency and accountability of these systems, as well as exploring advanced technologies like blockchain for better traceability. Continuous research and innovation are critical to addressing these challenges and adapting to the ever-changing landscape of online communication.

One of the key challenges is preventing AI algorithms from perpetuating existing biases or introducing new ones, which requires diverse training data and fairness-aware algorithms. Another is balancing moderation with free speech: over-censorship could stifle legitimate expression. Future efforts may focus on hybrid systems that combine AI with human expertise to ensure both fairness and efficiency. Technologies like blockchain could also make moderation decisions more transparent, giving users clearer insight into why their content was flagged or removed.
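The transparency benefit attributed to blockchain rests largely on tamper evidence: each record commits to the one before it, so altering an old decision breaks the chain. A real blockchain adds distribution and consensus, which this sketch does not attempt. It is only a single-process, hash-chained log of moderation decisions built on Python's standard `hashlib` and `json` modules, with hypothetical field names.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(record: Dict[str, str]) -> str:
    # sort_keys gives a canonical serialization, so the same record always hashes the same way
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()

class DecisionLog:
    """Append-only log in which every entry stores the hash of the entry before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, content_id: str, action: str, reason: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"content_id": content_id, "action": action, "reason": reason, "prev_hash": prev_hash}
        entry_hash = _digest(record)
        self.entries.append({**record, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry makes this return False."""
        prev_hash = GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or _digest(record) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

audit_log = DecisionLog()
audit_log.append("post-1", "remove", "hate speech")
audit_log.append("post-2", "allow", "satire")
print(audit_log.verify())                # True
audit_log.entries[0]["reason"] = "spam"  # simulate after-the-fact tampering
print(audit_log.verify())                # False
```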

Addressing biases in AI algorithms is a complex and ongoing process. It requires a commitment to diversity and inclusion at every stage of development, from the selection of training data to the design and testing of the algorithms. This includes actively seeking out and incorporating feedback from diverse user groups, as well as regularly auditing the system to identify and address any biases that may emerge. Additionally, transparency and accountability are crucial for building trust with users. This means providing clear explanations for moderation decisions and allowing users to appeal or challenge those decisions if they believe they were made in error.
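One concrete form of the regular auditing described above is comparing error rates across user groups. This minimal sketch computes each group's false positive rate (benign posts wrongly flagged, divided by all benign posts) against human-labeled ground truth and reports groups that fare noticeably worse than the best-treated one. The group names, records, and the 0.05 tolerance are hypothetical, and false positive rate parity is only one of several fairness criteria an audit might use.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Each record: (user_group, flagged_by_system, actually_harmful_per_human_label)
Record = Tuple[str, bool, bool]

def false_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Per group: share of benign posts that the system wrongly flagged."""
    benign: Dict[str, int] = defaultdict(int)
    benign_flagged: Dict[str, int] = defaultdict(int)
    for group, flagged, harmful in records:
        if not harmful:
            benign[group] += 1
            if flagged:
                benign_flagged[group] += 1
    return {group: benign_flagged[group] / benign[group] for group in benign}

def disparity_alerts(rates: Dict[str, float], tolerance: float = 0.05) -> List[str]:
    """Groups whose false positive rate exceeds the best-treated group's by more than tolerance."""
    best = min(rates.values())
    return [group for group, rate in rates.items() if rate - best > tolerance]

# Hypothetical audit sample.
RECORDS: List[Record] = [
    ("dialect_a", True, False), ("dialect_a", False, False),
    ("dialect_a", False, False), ("dialect_a", False, False),
    ("dialect_b", True, False), ("dialect_b", True, False),
    ("dialect_b", False, False), ("dialect_b", False, False),
    ("dialect_b", True, True),  # correctly flagged harmful post: not part of the FPR
]

rates = false_positive_rates(RECORDS)
print(rates)                    # {'dialect_a': 0.25, 'dialect_b': 0.5}
print(disparity_alerts(rates))  # ['dialect_b']
```

Here posts from `dialect_b` are wrongly flagged twice as often, exactly the kind of disparity that should trigger a review of training data and thresholds.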

The Future of Content Moderation

As technology evolves, the future of content moderation will likely see a blend of AI and human oversight. Advanced AI systems will handle the bulk of real-time analysis and flagging, while human moderators will make nuanced decisions that require contextual understanding. This hybrid approach aims to balance efficiency and fairness, ensuring that moderation practices are both effective and just.
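A common way to implement this division of labor is confidence-based routing: the model acts on its own only when it is very sure, and everything in between goes to a person. This is a minimal sketch; the `allow_below` and `remove_above` thresholds are hypothetical and in practice would be tuned against measured error rates.

```python
from enum import Enum

class Route(Enum):
    AUTO_ALLOW = "auto_allow"
    HUMAN_REVIEW = "human_review"
    AUTO_REMOVE = "auto_remove"

def route(score: float, allow_below: float = 0.2, remove_above: float = 0.95) -> Route:
    """Map a model's harm score in [0, 1] to an action; the uncertain middle band goes to humans."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score < allow_below:
        return Route.AUTO_ALLOW
    if score > remove_above:
        return Route.AUTO_REMOVE
    return Route.HUMAN_REVIEW

for s in (0.05, 0.6, 0.99):
    print(s, route(s).value)
```

Widening the middle band sends more content to moderators (slower, but fewer automated mistakes); narrowing it does the reverse, which is the efficiency and fairness trade-off the paragraph describes.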

The future of content moderation will likely see the development of even more sophisticated AI systems that can handle increasingly complex and nuanced forms of harmful content. This may include the use of advanced machine learning techniques, such as deep learning and reinforcement learning, to improve the system’s ability to understand context and make accurate decisions. Additionally, the integration of AI with other emerging technologies, such as virtual and augmented reality, could open up new possibilities for content moderation in immersive digital environments.

Multi-Agent AI is revolutionizing content moderation, offering enhanced capabilities for real-time analysis and flagging of harmful content. While this technology promotes safer online spaces, ongoing efforts are needed to address its challenges and ensure its ethical use for broader applications.

Connect with our experts to explore the capabilities of our latest addition, the AI4Mind Chatbot. It is transforming the social media landscape, creating fresh possibilities for businesses to engage in real-time, meaningful conversations with their audience.