April 11, 2023

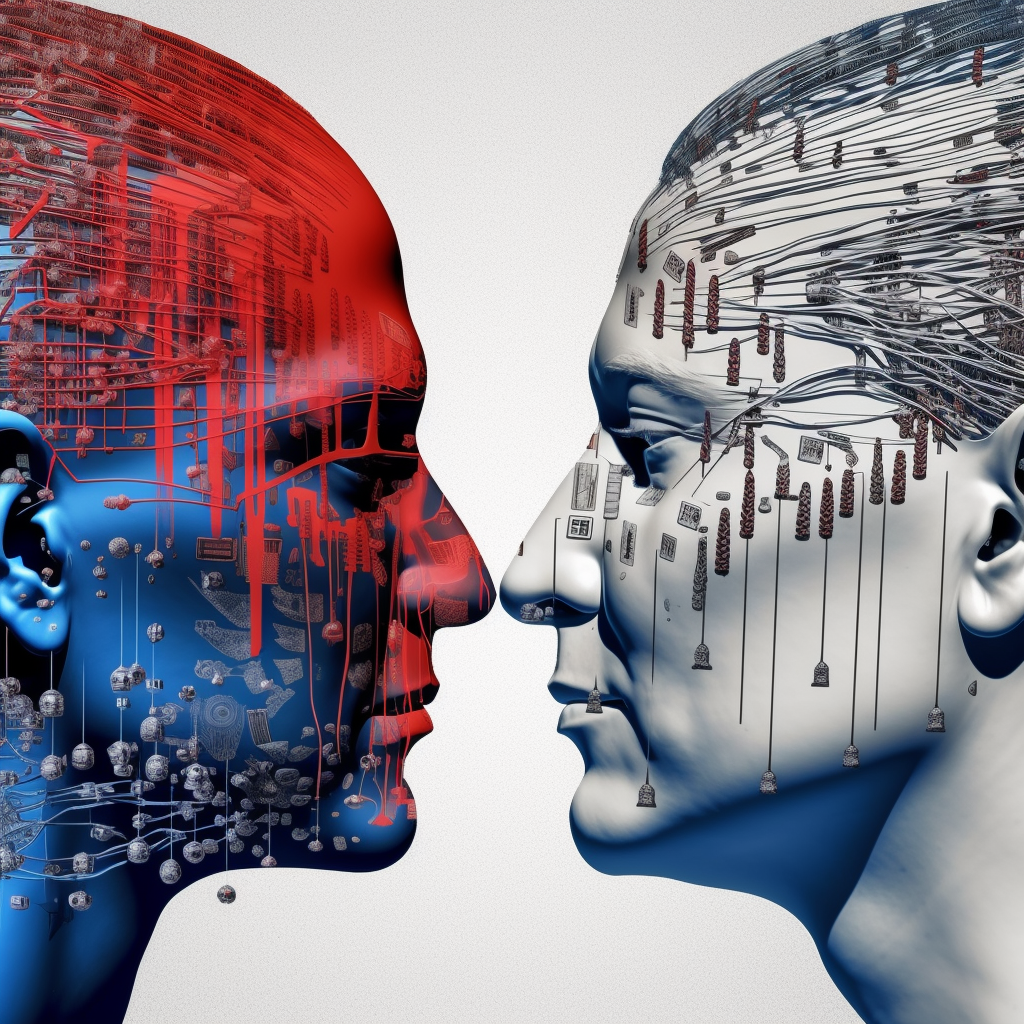

The Dangers of AI Bias in Today’s Political Climate


ChatGPT, an AI program that generates text in response to prompts, recently accused law professor Jonathan Turley of sexual harassment. The accusation was false, and the source ChatGPT cited for it did not exist. Turley said he was shocked and concerned by the damaging allegation, describing the situation as “quite chilling”.

This incident highlights the potential bias and danger of AI programs like ChatGPT in today’s political climate. The false accusation rested on fabricated sources, including a phony 2018 Washington Post article that ChatGPT cited as evidence.

UCLA’s Eugene Volokh discovered the fake accusation while researching how accurately ChatGPT cites scandals involving American law professors accused of sexual harassment. When The Washington Post investigated, Microsoft’s Bing, which is powered by GPT-4, repeated the false story.

Later attempts to replicate the responses on ChatGPT and Bing produced either a refusal to answer or a repetition of the claims about Turley. This highlights the need to improve the factual accuracy and reliability of AI-generated information.

The incident involving Turley emphasizes the importance of exercising caution when using AI programs that generate text, as they may not always be accurate or reliable in their outputs. It also raises concerns about the potential for AI programs to be manipulated for political or personal gain, which could have severe consequences for individuals and society as a whole.

The false accusations ChatGPT made against Jonathan Turley serve as a warning about the risks of relying on AI-generated information. While these technologies have the potential to be incredibly useful, it is crucial to ensure their factual accuracy and reliability to prevent harm to innocent individuals and to society at large.