June 12, 2023

ChatGPT and the Challenge of Fake Case Law – Lawyers Speak Out


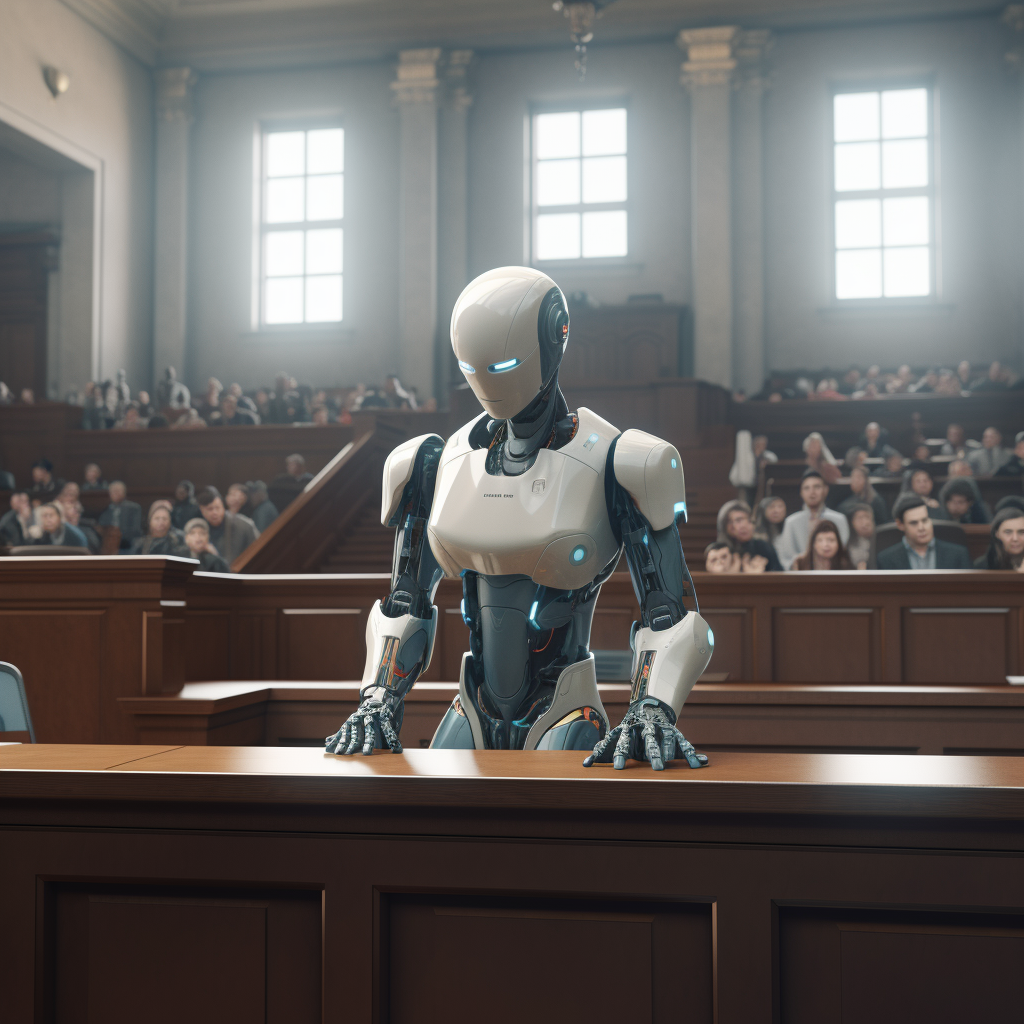

The emergence of AI-powered language models such as ChatGPT has changed how many industries work. However, recent reports have shed light on an unexpected challenge faced by lawyers: being tricked into citing fake case law. Lawyers have voiced concern and frustration about the unintended consequences of relying on ChatGPT for legal research. Let’s look at what these reports say and explore the implications of this issue.

Lawyers are blaming ChatGPT for leading them to cite bogus case law. The language model, while highly capable, can generate fictitious legal precedents that appear convincing. This challenge has prompted legal professionals to stress the importance of human oversight and critical analysis in the research process. The reports describe cases in which lawyers unknowingly referenced non-existent legal authorities, underscoring the need for caution when using AI-powered tools.

The reporting also examines the concerns raised by lawyers in more depth. It looks at the repercussions of relying solely on ChatGPT for legal research, emphasizing the potential for misinformation and its impact on the integrity of the legal system. Lawyers express frustration at the lack of accountability of AI models like ChatGPT and urge developers to address the issue and improve the accuracy and reliability of AI-generated legal information.

These incidents feed into the broader debate surrounding the use of AI in the legal profession. While AI-powered tools can provide efficiency and convenience, they also pose challenges when it comes to accuracy and accountability. Legal experts emphasize the importance of regulation to ensure transparency and reliability in AI-generated legal information. They also stress the irreplaceable value of human expertise and critical thinking in legal research and decision-making.

The unintended consequences of using ChatGPT for legal research, as discussed in these articles, underscore the importance of responsible AI adoption and human oversight. While AI-powered tools like ChatGPT can be very useful, their potential to generate fake case law calls for caution and critical analysis. Developers, legal professionals, and regulators need to work together to address this challenge and improve the accuracy and reliability of AI-generated legal information. By striking a balance between AI assistance and human expertise, the legal profession can navigate the complexities of AI technology while upholding the integrity of the legal system.