MIT researchers have devised a method for detecting “adversarial examples,” inputs that cause neural networks to misclassify them, in order to better measure how robust the models are for various real-world tasks.

Sourced through Scoop.it from: news.mit.edu

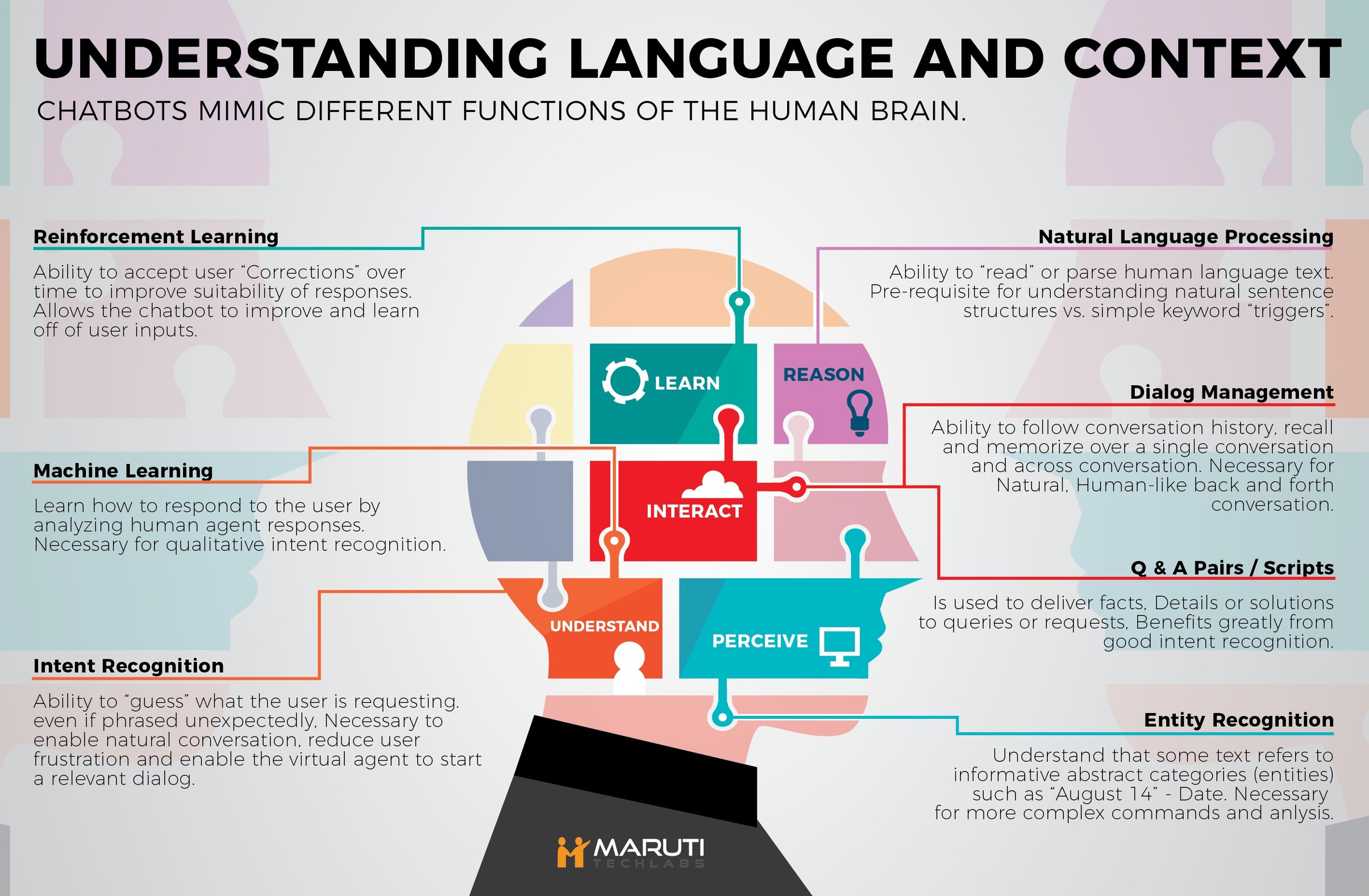

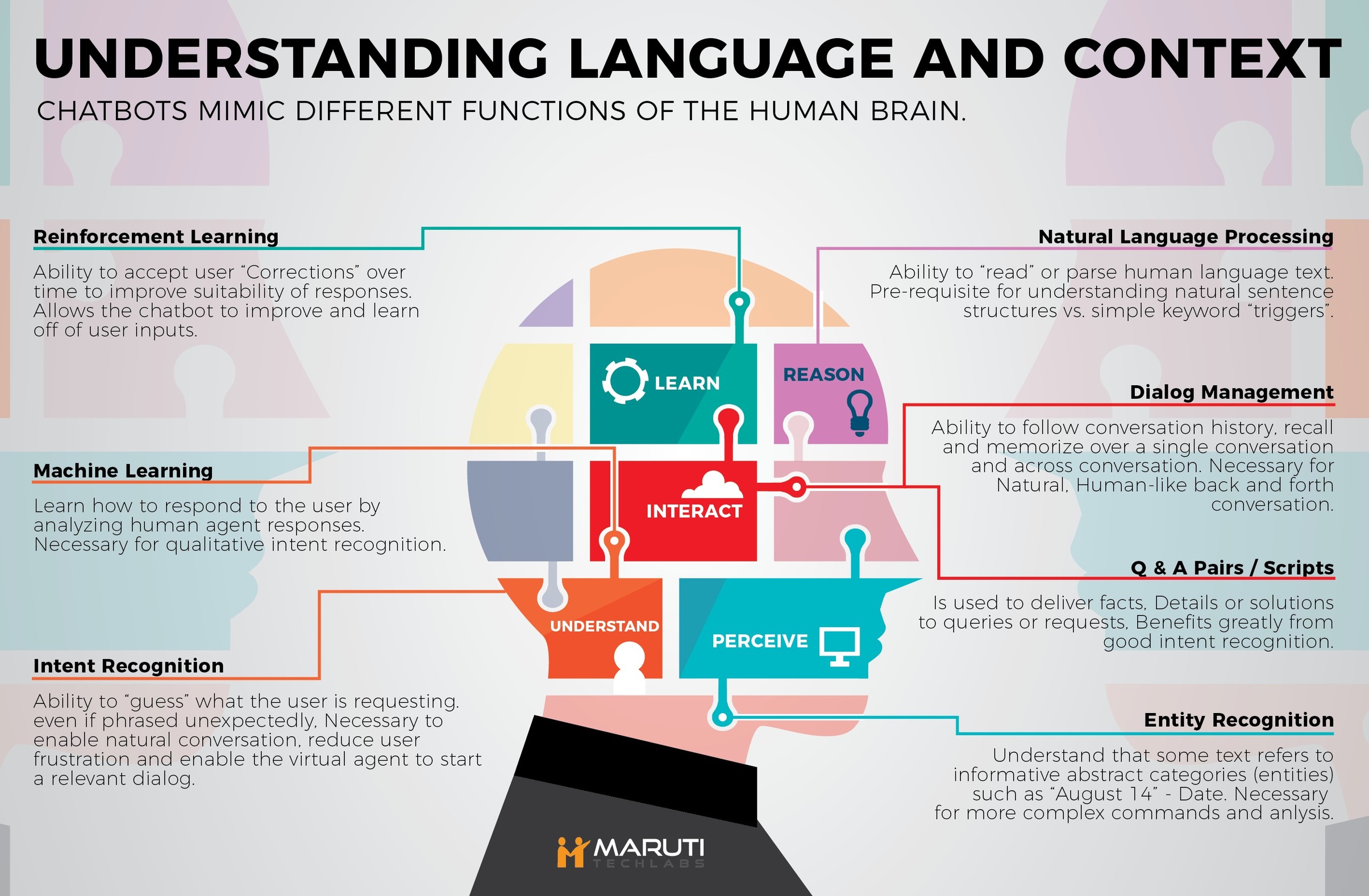

Machine learning uses algorithms to turn a data set into a predictive model. Which algorithm works best depends on the problem.

Sourced through Scoop.it from: www.infoworld.com

Comforte AG's Anna Russell explains why organizations should be doing more to protect their valuable data.

Sourced through Scoop.it from: www.techradar.com

Sourced through Scoop.it from: customerthink.com

The demand for machine learning engineers continues to grow. Tom Merritt lists five skills necessary to land the job.

Sourced through Scoop.it from: www.techrepublic.com

Machine learning and artificial intelligence took the spotlight as 10 companies showcased the latest technology disrupting the transportation and logistics world.

Sourced through Scoop.it from: www.benzinga.com

AI and machine learning combined with ever-increasing amounts of data are changing our commercial and social landscapes. A number of themes and issues are emerging within these sectors that CIOs need to be aware of.

Sourced through Scoop.it from: www.cio.com

Ahead of its Build conference, Microsoft today released a slew of new machine learning products and tweaks to some of its existing services. These range from no-code tools to hosted notebooks, with a number of new APIs and other services in-between. The core theme, here, though, is that Microsoft is continuing its strategy of democratizing […]

Sourced through Scoop.it from: techcrunch.com